40 machine learning noisy labels

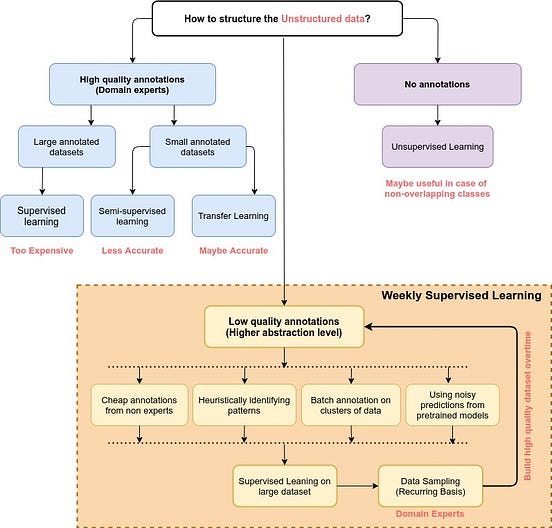

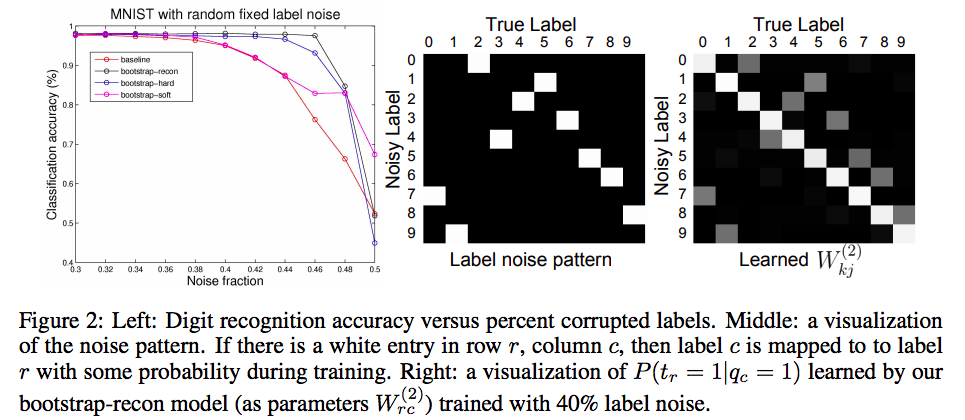

Preparing Medical Imaging Data for Machine Learning - PMC Feb 18, 2020 · Fully annotated data sets are needed for supervised learning, whereas semisupervised learning uses a combination of annotated and unannotated images to train an algorithm (67,68). Semisupervised learning may allow for a limited number of annotated cases; however, large data sets of unannotated images are still needed.

Data Noise and Label Noise in Machine Learning Asymmetric label noise, all labels: a randomly chosen α% of all labels i are switched to label i + 1, or to 0 for the maximum i (see Figure 3). This mirrors the real-world scenario in which labels are randomly corrupted, since the order of labels in datasets is also random [6]. Figure 3: asymmetric label noise (own image).
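The asymmetric label noise rule described above (a chosen fraction of labels i becomes i + 1, wrapping to 0 for the largest label) can be sketched in a few lines of plain Python. The function name `add_asymmetric_noise`, its parameters, and the toy label list are illustrative assumptions, not code from the quoted article.

```python
import random


def add_asymmetric_noise(labels, alpha, num_classes, seed=0):
    """Switch a randomly chosen fraction `alpha` of labels i to i + 1 (wrapping to 0).

    Hypothetical helper: the name and signature are assumptions for illustration.
    """
    rng = random.Random(seed)
    noisy = list(labels)
    n_flip = round(alpha * len(noisy))
    # Pick exactly n_flip distinct positions to corrupt.
    flip_idx = rng.sample(range(len(noisy)), n_flip)
    for idx in flip_idx:
        # Label i becomes i + 1; the maximum label wraps around to 0.
        noisy[idx] = (noisy[idx] + 1) % num_classes
    return noisy, sorted(flip_idx)


# Toy data: 100 labels spread evenly over 5 classes, 20% of them corrupted.
labels = [0, 1, 2, 3, 4] * 20
noisy_labels, flipped = add_asymmetric_noise(labels, alpha=0.2, num_classes=5)
```

Returning the corrupted indices alongside the noisy labels makes it easy to check later how many of them a noise-detection method recovers.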

Toronto Machine Learning His work on Multitask Learning helped create interest in a subfield of machine learning called Transfer Learning. Rich received an NSF CAREER Award in 2004 (for Meta Clustering), best paper awards in 2005 (with Alex Niculescu-Mizil), 2007 (with Daria Sorokina), and 2014 (with Todd Kulesza, Saleema Amershi, Danyel Fisher, and Denis Charles), and ...

Machine learning noisy labels

How to Improve Deep Learning Model Robustness by Adding Noise Import the noise layer with `from keras.layers import GaussianNoise`, then define it with `layer = GaussianNoise(0.1)`. The output of the layer will have the same shape as the input, with the only modification being the addition of noise to the values.

Data fusing and joint training for learning with noisy labels Abstract. It is well known that deep learning depends on a large amount of clean data. Because of the high cost of annotation, various methods have been devoted to annotating data automatically. However, this generates a large number of noisy labels in the datasets, which is a challenging problem. In this paper, we propose a new method for ...

Learning with Noisy Labels - NeurIPS The theoretical machine learning community has also investigated the problem of learning from noisy labels. Soon after the introduction of the noise-free PAC model, Angluin and Laird [1988] proposed the random classification noise (RCN) model where each label is flipped independently with some probability ρ ∈ [0, 1/2).
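As a rough illustration of the random classification noise (RCN) model for binary labels, the sketch below flips each label independently with probability ρ. The function name `flip_labels_rcn` and the toy data are assumptions made for this example only.

```python
import random


def flip_labels_rcn(labels, rho, seed=0):
    """Flip each binary label (0 or 1) independently with probability rho.

    Hypothetical helper; rho is restricted to [0, 1/2) as in the RCN model.
    """
    if not 0.0 <= rho < 0.5:
        raise ValueError("rho must lie in [0, 1/2)")
    rng = random.Random(seed)
    # Each label gets its own independent coin toss.
    return [1 - y if rng.random() < rho else y for y in labels]


clean = [0, 1] * 5000
noisy = flip_labels_rcn(clean, rho=0.3)

# Empirical flip rate; it should land close to rho for a sample this large.
observed_rate = sum(c != n for c, n in zip(clean, noisy)) / len(clean)
```

Unlike a scheme that corrupts an exact number of labels, the number of flips here is itself random, which matches the "independently with some probability" wording of the model.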

subeeshvasu/Awesome-Learning-with-Label-Noise - GitHub 2021-IJCAI - Towards Understanding Deep Learning from Noisy Labels with Small-Loss Criterion. 2022-WSDM - Towards Robust Graph Neural Networks for Noisy Graphs with Sparse Labels. 2022-Arxiv - Multi-class Label Noise Learning via Loss Decomposition and Centroid Estimation.

Meta-learning from noisy labels :: Päpper's Machine Learning Blog ... MNIST itself is not a very noisy dataset, so first, let's add a lot of noise and get our noisy and clean set. We'll create 80% noise, so 80% of our labels will be changed to some random other class. For the clean set, we'll keep 50 examples per class, so a tiny portion of our data.

GitHub - cleanlab/cleanlab: The standard data-centric AI … Generate noisy labels using the noise matrix, which guarantees an exact amount of noise in the labels: `from cleanlab.benchmarking.noise_generation import generate_noisy_labels`, then `s_noisy_labels = generate_noisy_labels(y_hidden_actual_labels, noise_matrix)`. This package is full of other useful methods for learning with noisy labels.

How Noisy Labels Impact Machine Learning Models | iMerit Supervised Machine Learning requires labeled training data, and large ML systems need large amounts of training data. Labeling training data is resource intensive, and while techniques such as crowd sourcing and web scraping can help, they can be error-prone, adding 'label noise' to training sets.
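The MNIST setup quoted above (80% of labels changed to a random other class, plus a small clean set of 50 examples per class) can be imitated on a plain list of labels. This is only a sketch: the helper names are hypothetical, the labels are synthetic stand-ins for MNIST, and the clean subset is assumed to be drawn from the true labels, which the snippet does not spell out.

```python
import random
from collections import defaultdict


def corrupt_to_other_class(labels, noise_rate, num_classes, seed=0):
    """Change a fraction `noise_rate` of labels to a different, randomly chosen class."""
    rng = random.Random(seed)
    noisy = list(labels)
    n_corrupt = round(noise_rate * len(noisy))
    for idx in rng.sample(range(len(noisy)), n_corrupt):
        # Exclude the current class so every selected label really changes.
        others = [c for c in range(num_classes) if c != noisy[idx]]
        noisy[idx] = rng.choice(others)
    return noisy


def pick_clean_subset(labels, per_class, seed=0):
    """Return sorted indices of `per_class` randomly chosen examples for each class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    return sorted(i for idxs in by_class.values() for i in rng.sample(idxs, per_class))


# Synthetic stand-in for MNIST labels: 5000 examples over 10 classes.
true_labels = [i % 10 for i in range(5000)]
noisy_labels = corrupt_to_other_class(true_labels, noise_rate=0.8, num_classes=10)
clean_idx = pick_clean_subset(true_labels, per_class=50)
```

The indices in `clean_idx` point at examples whose true label would be kept for the small trusted set, while `noisy_labels` plays the role of the large corrupted training set.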

[P] Noisy Labels and Label Smoothing : MachineLearning - reddit My best guess is that this 'label smoothing' thing isn't going to change the optimal classification boundary at all (in a maximum-likelihood sense) if the "smoothing" is symmetrical with respect to the labels, and even the non-symmetric case can be addressed in a rather more straightforward way, simply by adjusting the weight of more "uncertain" points in the dataset.

Deep Learning: Dealing with noisy labels | by Tarun B | Medium Adding a noise layer over the base model in deep learning. This noise layer will learn the transition between clean labels and bad labels. Essentially, we want the noise layer or noise model to...

Machine learning - Wikipedia Machine learning (ML) ... In weakly supervised learning, the training labels are noisy, limited, or imprecise; however, these labels are often cheaper to obtain, resulting in larger effective training sets. Reinforcement learning. Reinforcement learning is an area of ...

Removing Label Noise for Machine Learning applications Of course, many machine learning algorithms (for example Random Forest) can handle noisy training inputs, but with too much noise the algorithm treats the noise as information-bearing samples and learns it, so inference on another dataset (with no noise, or with different, arbitrary noise) will fail as well.
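To make the "noise layer" idea from the Medium snippet more concrete, the sketch below applies a fixed transition matrix to a base model's clean-class probabilities. It rests on assumptions: in the approach described, the matrix would be learned during training, whereas here it is hard-coded, and the function name and numbers are invented for illustration.

```python
def apply_noise_layer(clean_probs, transition):
    """Map clean-class probabilities to noisy-label probabilities.

    transition[i][j] is assumed to hold P(noisy label = j | clean label = i),
    so every row of the matrix sums to 1.
    """
    num_classes = len(transition)
    return [
        sum(clean_probs[i] * transition[i][j] for i in range(num_classes))
        for j in range(num_classes)
    ]


# Hypothetical 3-class transition matrix (a learned layer would estimate this).
transition = [
    [0.8, 0.2, 0.0],
    [0.0, 0.9, 0.1],
    [0.1, 0.0, 0.9],
]

# Output of the base model for one example, interpreted as clean-class probabilities.
clean_probs = [0.7, 0.2, 0.1]
noisy_probs = apply_noise_layer(clean_probs, transition)
```

During training, the loss would be computed between `noisy_probs` and the observed (possibly wrong) label, so the base model underneath is free to keep predicting the clean class.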

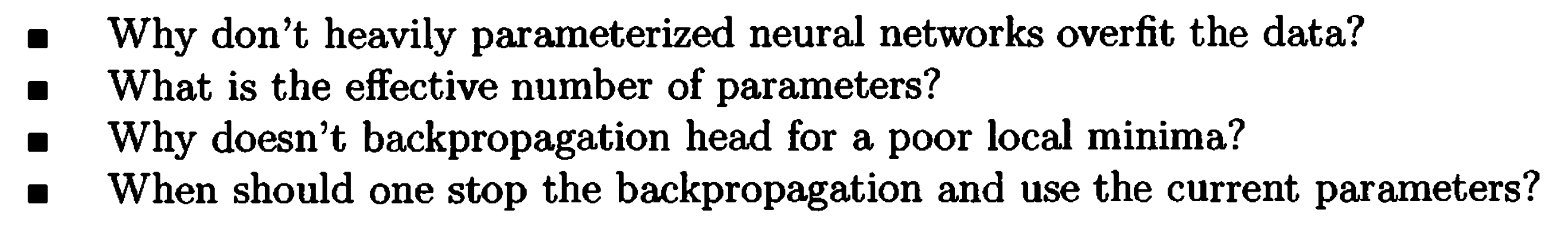

Understanding Deep Learning on Controlled Noisy Labels In "Beyond Synthetic Noise: Deep Learning on Controlled Noisy Labels", published at ICML 2020, we make three contributions towards better understanding deep learning on non-synthetic noisy labels. First, we establish the first controlled dataset and benchmark of realistic, real-world label noise sourced from the web (i.e., web label noise ...

Learning from Noisy Labels with Deep Neural Networks: A Survey As noisy labels severely degrade the generalization performance of deep neural networks, learning from noisy labels (robust training) is becoming an important task in modern deep learning applications. In this survey, we first describe the problem of learning with label noise from a supervised learning perspective.

A Gentle Introduction to Bayes Theorem for Machine Learning Dec 04, 2019 · A machine learning algorithm or model is a specific way of thinking about the structured relationships in the data. ... high dimension, noisy, and computationally expensive to evaluate. ... Regression and Classification are supervised learning and we have labels.

An Introduction to Classification Using Mislabeled Data The basic steps are: train a bunch of classifiers using a subset of the training data, use them to predict the labels of the rest of the data, and then take the percentage of classifiers that failed to predict a sample's given label as the probability that the sample is mislabeled.
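The steps in the last snippet can be sketched with a deliberately tiny classifier. Everything here is an assumption made for illustration: the classifier is a 1-D nearest-centroid rule rather than whatever the quoted article uses, and the function names, the 50% training split, and the toy data are all hypothetical.

```python
import random


def fit_centroids(xs, ys):
    """Compute the mean feature value per class (a minimal 1-D classifier)."""
    sums, counts = {}, {}
    for x, y in zip(xs, ys):
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}


def predict(centroids, x):
    """Predict the class whose centroid is closest to x."""
    return min(centroids, key=lambda y: abs(x - centroids[y]))


def mislabel_probabilities(xs, ys, n_classifiers=50, train_fraction=0.5, seed=0):
    """Fraction of held-out predictions that disagree with each sample's given label."""
    rng = random.Random(seed)
    n = len(xs)
    misses, votes = [0] * n, [0] * n
    for _ in range(n_classifiers):
        # Train on a random subset, then score only the samples left out of it.
        train = set(rng.sample(range(n), int(train_fraction * n)))
        centroids = fit_centroids([xs[i] for i in train], [ys[i] for i in train])
        for i in range(n):
            if i in train:
                continue
            votes[i] += 1
            if predict(centroids, xs[i]) != ys[i]:
                misses[i] += 1
    return [m / v if v else None for m, v in zip(misses, votes)]


# Two well-separated clusters, with two labels deliberately swapped.
xs = [0.1 * k for k in range(20)] + [10.0 + 0.1 * k for k in range(20)]
ys = [0] * 20 + [1] * 20
ys[3], ys[25] = 1, 0

probs = mislabel_probabilities(xs, ys)
suspects = [i for i, p in enumerate(probs) if p is not None and p > 0.5]
```

On this toy data the two swapped samples (indices 3 and 25) are the ones that end up in `suspects`, because nearly every classifier that holds them out predicts the label of the cluster they actually sit in.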

How Noisy Labels Impact Machine Learning Models - KDnuggets While this study demonstrates that ML systems have a basic ability to handle mislabeling, many practical applications of ML are faced with complications that make label noise more of a problem. These complications include not being able to create very large training sets, and systematic labeling errors that confuse machine learning.

Noisy Labels: Theoretical Approaches/Empirical Studies We demonstrate that several proposed learning-with-noisy-labels solutions in the literature relate closely to negative label smoothing (NLS), which is defined as using a negative weight to combine the hard and soft labels. We unify (positive) LS and NLS into GLS, and provide an understanding of the properties of GLS when learning with noisy labels.
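A small sketch may help show what combining hard and soft labels with a positive or negative weight looks like. It assumes the soft label is the uniform distribution over K classes, so the smoothed target is (1 - r) · y + r / K; the function name and the example rates are illustrative choices, not taken from the quoted abstract.

```python
def generalized_label_smoothing(one_hot, r):
    """Combine a hard one-hot label with a uniform soft label using weight r.

    r > 0 gives ordinary (positive) label smoothing and r < 0 gives negative
    label smoothing. The uniform soft label is an assumption of this sketch.
    """
    k = len(one_hot)
    return [(1.0 - r) * y + r / k for y in one_hot]


hard_label = [0, 0, 1, 0]

# Positive smoothing moves mass from the labeled class to the other classes.
positive = generalized_label_smoothing(hard_label, r=0.2)

# Negative smoothing pushes the labeled class above 1 and the others below 0.
negative = generalized_label_smoothing(hard_label, r=-0.2)
```

In both cases the entries still sum to 1, but with a negative weight the target is no longer a valid probability vector, which is what separates NLS from ordinary label smoothing.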

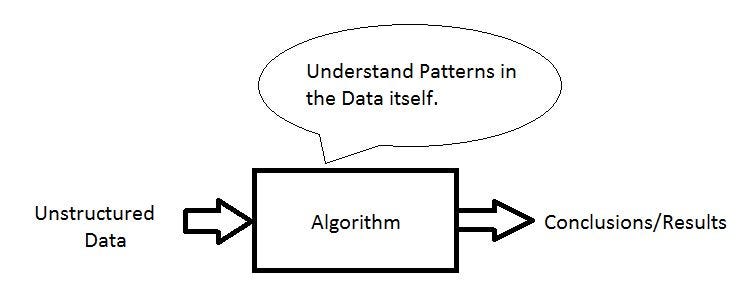

Machine Learning Algorithm - an overview | ScienceDirect Topics Machine Learning Algorithm. An ML algorithm, which is a part of AI, uses an assortment of statistical, probabilistic, and optimization techniques that allow computers to learn from past examples and detect hard-to-discern patterns in massive, noisy, or …


GitHub - weijiaheng/Advances-in-Label-Noise-Learning: A ... Jun 15, 2022 · Learning from Noisy Labels via Dynamic Loss Thresholding. Evaluating Multi-label Classifiers with Noisy Labels. Self-Supervised Noisy Label Learning for Source-Free Unsupervised Domain Adaptation. Transform consistency for learning with noisy labels. Learning to Combat Noisy Labels via Classification Margins.

Weakly Supervised Learning: Classification with limited annotation capacity | by Ved Vasu Sharma ...

Towards harnessing feature embedding for robust learning with noisy labels The memorization effect of deep neural networks (DNNs) plays a pivotal role in recent label noise learning methods. To exploit this effect, the model prediction-based methods have been widely adopted, which aim to exploit the outputs of DNNs in the early stage of learning to correct noisy labels. However, we observe that the model will make mistakes during label prediction, resulting in ...

Different types of Machine learning and their types. | by Madhu Sanjeevi ( Mady ) | Deep Math ...

How to handle noisy labels for robust learning from uncertainty Most deep neural networks (DNNs) are trained with large amounts of noisy labels when they are applied. Because DNNs have enough capacity to fit arbitrary noisy labels, training them robustly on such labels is known to be difficult. Noisy labels degrade the performance of DNNs because the network memorizes them through overfitting.

Learning with Noisy Labels via Sparse Regularization. Xiong Zhou, Xianming Liu, Chenyang Wang, Deming Zhai, Junjun Jiang, Xiangyang Ji. Learning with noisy labels is an important and challenging task for training accurate deep neural networks. Some commonly-used loss functions, such as Cross Entropy (CE), suffer from severe overfitting to noisy labels.
